QuikPay Revamp

Author: Srinjoy Santra (@s_srinjoy)

Originally posted at the BookMyShow Tech Blog

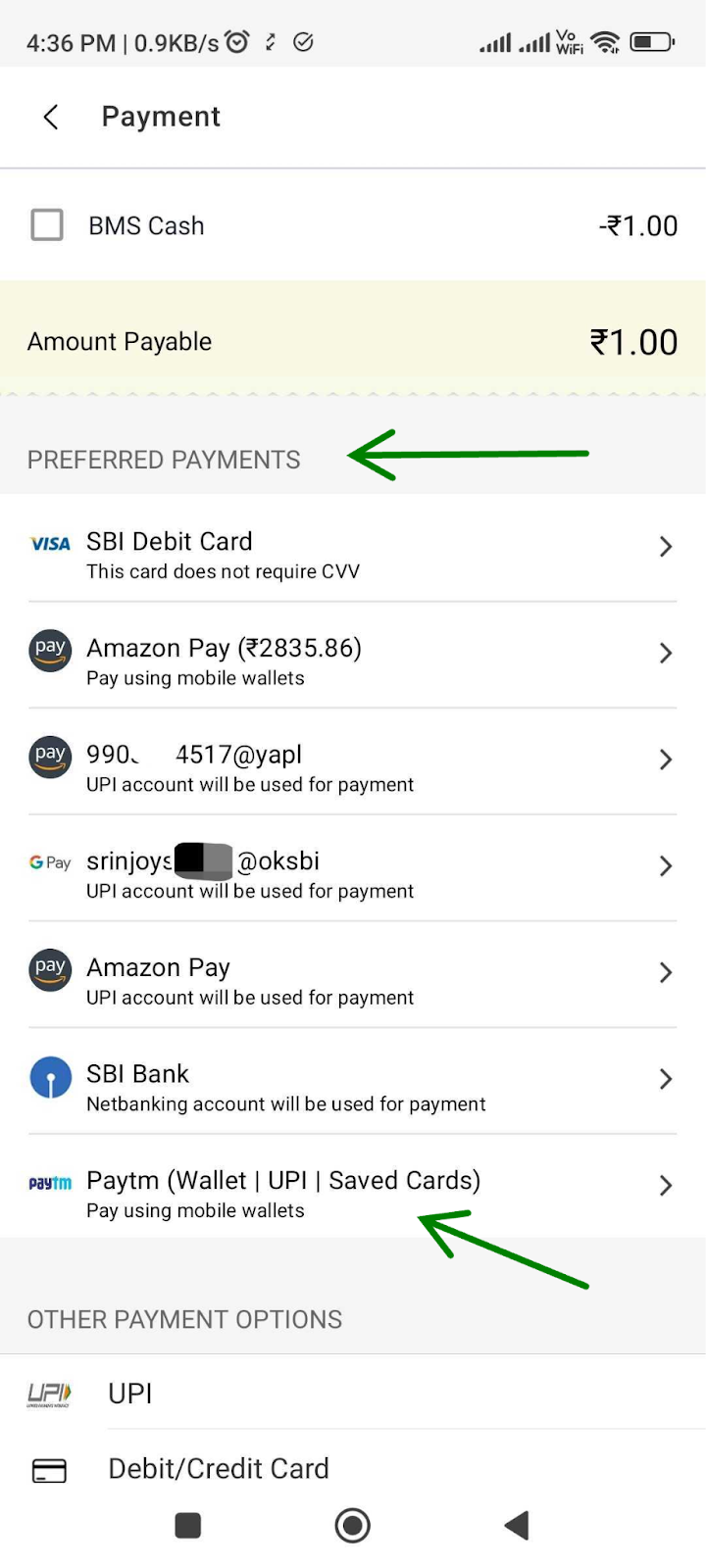

Like most major B2C companies, we have a saved-payments feature, which we fondly call QuikPay. QuikPay pre-fills payment instrument data, which speeds up checkout and improves overall conversions. It appears on the profile, order summary (OSP) and payment listing pages.

The QuikPay module lets customers save payment methods such as cards, wallets, UPI (collect and intent), banks and more, either from their profile or while making a payment.

A few major ideas that stuck from our internal brainstorming were:

- The frequency of a payment instrument's use should be considered in the listing.

- If a saved payment method has a low success rate, we should hide it.

- Recently unsuccessful payment instruments should not end up being shown in the listing.

Our existing system ordered the QuikPay list by recency of use, i.e. the most recently used instrument appeared highest. I proposed combining frequency and recency of use and ranking the instruments accordingly.

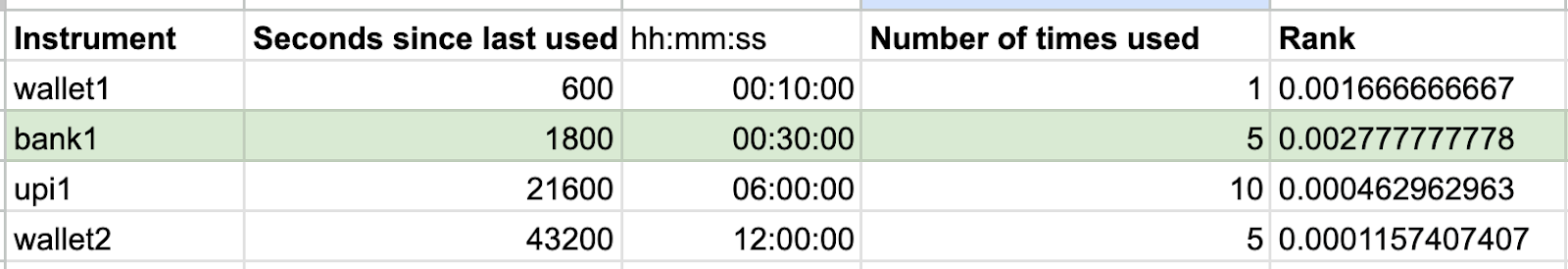

The mathematical logic is as follows:

where the two inputs are the frequency, i.e. the number of times a particular customer has used an instrument, and the recency, i.e. the time elapsed since that customer last used the instrument.

In the example above, the instruments are listed by recency of access. Because bank1 has the higher rankValue, it appears at the top of the ranked list, followed by wallet1.
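The exact equation is not reproduced in this post, so the sketch below is only one hypothetical way to blend the two signals: it divides the usage count by one plus the days since last use, so frequent use pushes an instrument up and staleness pulls it down. The `Instrument` type, its field names and the scoring function are illustrative assumptions, not the production formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instrument:
    name: str
    use_count: int               # frequency: times this customer has used it
    days_since_last_use: float   # recency: time since this customer last used it


def rank_value(inst: Instrument) -> float:
    """Hypothetical score: rises with use_count, falls as the last use ages."""
    return inst.use_count / (1.0 + inst.days_since_last_use)


def rank(instruments: List[Instrument]) -> List[Instrument]:
    """Highest rank_value first; ties go to the more recently used instrument."""
    return sorted(instruments, key=lambda i: (-rank_value(i), i.days_since_last_use))


if __name__ == "__main__":
    saved = [Instrument("wallet1", 2, 1), Instrument("bank1", 10, 3)]
    print([i.name for i in rank(saved)])  # ['bank1', 'wallet1']
```

With these made-up numbers, pure recency would put wallet1 first (used a day ago versus three days ago), while the score gives bank1 2.5 against wallet1's 1.0, so bank1 moves to the top.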

Architecture design

After finalising the product requirements, I looked at the technical debt we could pay down along the way.

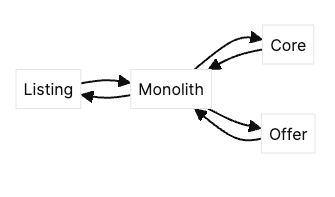

Old flow

Overview

The Core service interfaces with the payment aggregator, combines that data with the saved instruments and responds to upstream services.

Our older frontend clients called the Monolith to load QuikPay data.

A few years ago, the Listing service was introduced as a backend for frontend (BFF).

The Offer service was used to merge offer details into the QuikPay instruments.

Initially, the Monolith contained the Core service's code. Over the years, to handle more scale, Core was carved out into a separate service to improve agility, resilience and maintainability.

Limitations

Routing calls through the Monolith added an extra network hop.

The Monolith used legacy encoding formats.

The Monolith lacked reactive resilience policies such as retries.

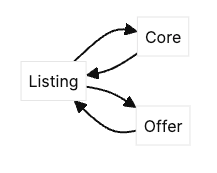

Proposed flow

Overview

- The Monolith has been removed from the flow.

- The Listing service now calls two services directly:

  - Core, via newly exposed performant and resilient APIs.

  - Offer, to add offer details.

Refactor of Core service

API design

Many of our internal service calls are built on gRPC. However, gRPC requires careful versioning and schema-evolution management, and breaking changes require regenerating clients, which adds friction to updates. Since new payment methods with varied schemas are added frequently, I chose REST (HTTP) APIs for this use case to keep the contract flexible: fields in JSON responses can be added or omitted without breaking consumers.
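To illustrate the flexibility argument, here is a minimal "tolerant reader" sketch of a JSON consumer: it reads only the fields it needs, defaults the optional ones and ignores anything it does not recognise. The payload shape and field names (`instruments`, `name`, `type`, `displayLabel`) are hypothetical, not the actual QuikPay contract.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SavedInstrument:
    name: str
    type: str
    display_label: Optional[str] = None


def parse_instruments(body: str) -> List[SavedInstrument]:
    """Tolerant reader: pick out known fields, default optional ones, ignore the rest."""
    payload = json.loads(body)
    return [
        SavedInstrument(
            name=item["name"],
            type=item["type"],
            display_label=item.get("displayLabel"),  # optional: may be omitted
        )
        for item in payload.get("instruments", [])
    ]


if __name__ == "__main__":
    # A newer response adds a UPI instrument with an extra "vpa" field
    # and a top-level "nextCursor"; the same client code still parses it.
    newer = '{"instruments": [{"name": "upi1", "type": "upi", "vpa": "user@bank"}], "nextCursor": null}'
    print(parse_instruments(newer))
```

A response that gains a new instrument type or extra fields still parses with unchanged client code, which is the property the REST/JSON choice relies on.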

The read APIs

Get All

`api/quickPay/instruments?in=card&in=upi&out=wallet`

Get One

`api/quickPay/instruments/:name`

I added filtering to reduce the response size and thus make the API faster. For example, the Offers Listing page uses the Get All API to list all saved cards.
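A minimal sketch of how such filters could be applied, assuming `in` lists the instrument types to include (all types when absent) and `out` lists types to exclude. Those semantics, the `type` field and the helper names are assumptions inferred from the example URL; only `urllib.parse` from the standard library is used.

```python
from typing import Dict, List, Set, Tuple
from urllib.parse import parse_qs, urlparse


def parse_filters(url: str) -> Tuple[Set[str], Set[str]]:
    """Collect the repeated `in` and `out` query parameters of a Get All URL."""
    query = parse_qs(urlparse(url).query)
    return set(query.get("in", [])), set(query.get("out", []))


def filter_instruments(
    instruments: List[Dict[str, str]], include: Set[str], exclude: Set[str]
) -> List[Dict[str, str]]:
    """Keep an instrument if its type is included (or no include list was given)
    and it is not explicitly excluded."""
    return [
        inst
        for inst in instruments
        if (not include or inst["type"] in include) and inst["type"] not in exclude
    ]


if __name__ == "__main__":
    include, exclude = parse_filters("api/quickPay/instruments?in=card&in=upi&out=wallet")
    saved = [
        {"name": "card1", "type": "card"},
        {"name": "wallet1", "type": "wallet"},
        {"name": "upi1", "type": "upi"},
    ]
    print([i["name"] for i in filter_instruments(saved, include, exclude)])  # ['card1', 'upi1']
```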

Business logic

Most of our QuikPay data is stored in a MongoDB collection, which we discussed in another post. Some additional data comes from our payment aggregator, depending on various conditions. As the number of payment instruments available in India proliferated, we kept adding patches to support them. This eroded the structure of the code and made debugging and testing difficult, so we decided to re-architect the entire flow.

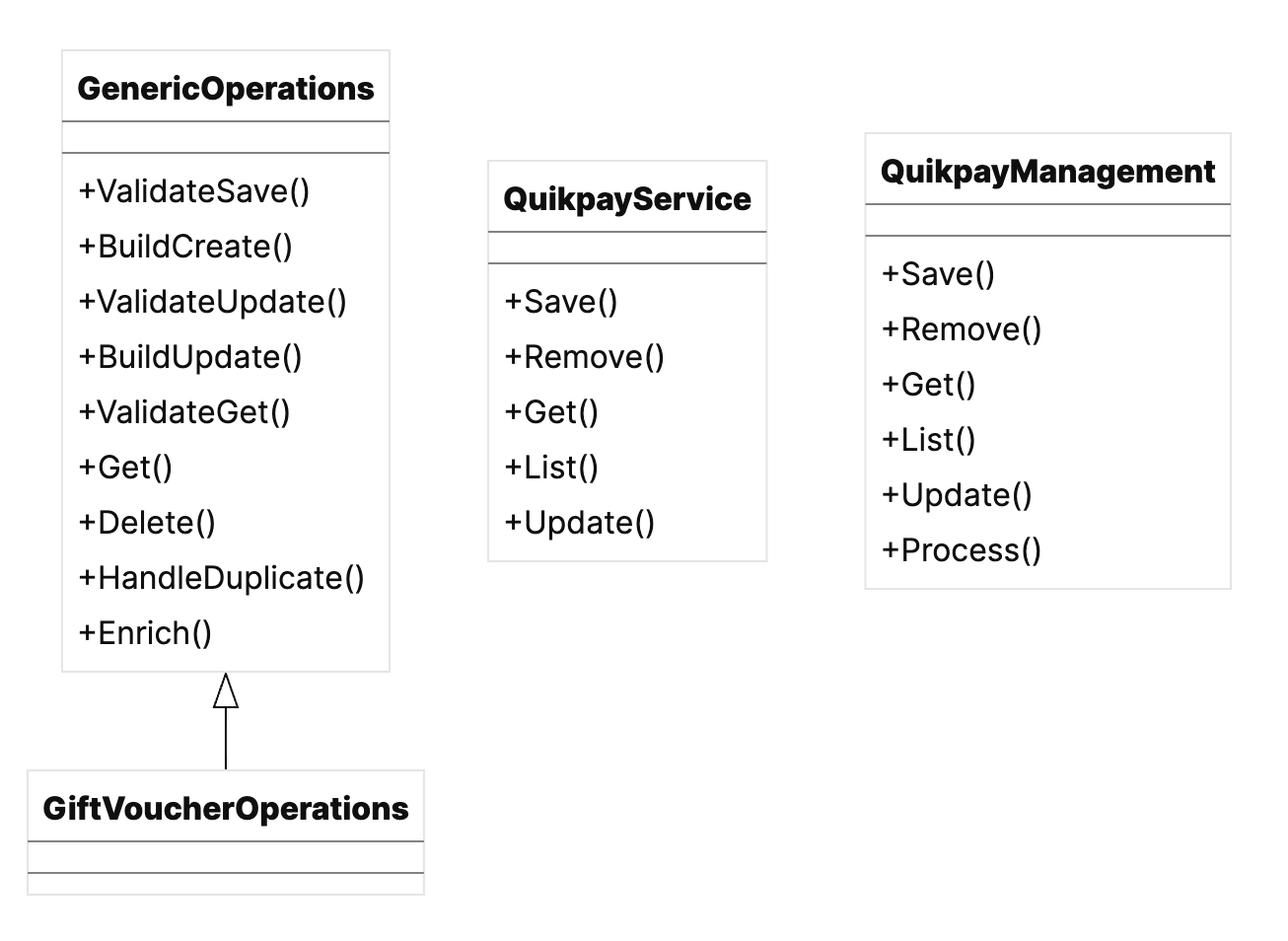

QuikpayManagement is a thin layer that receives requests from the API controllers and other objects.

It validates the authenticity of customers.

The Process() method translates the user's selected option and pre-fills it with saved data during a payment.

The Update() method, among other things, increments the usage count when a successful-payment event is received.

QuikpayService handles lower-level tasks such as working with the MongoDB client and the aggregator APIs.
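The sketch below shows this layering for the Update() path under loud assumptions: a Python dict stands in for the MongoDB collection, a set of "authenticated customers" stands in for real authentication, and the `SavedRecord` fields (`use_count`, `last_used_at`) are hypothetical. In a real MongoDB-backed service the increment would more likely be a single atomic update using the `$inc` and `$set` operators.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional, Set, Tuple


@dataclass
class SavedRecord:
    use_count: int = 0
    last_used_at: Optional[datetime] = None


class QuikpayService:
    """Lower-level layer; a dict stands in for the MongoDB collection here."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], SavedRecord] = {}

    def record_use(self, customer_id: str, instrument: str, at: datetime) -> SavedRecord:
        record = self._store.setdefault((customer_id, instrument), SavedRecord())
        record.use_count += 1      # frequency signal
        record.last_used_at = at   # recency signal
        return record


class QuikpayManagement:
    """Thin layer: validates the customer, then delegates to QuikpayService."""

    def __init__(self, service: QuikpayService, authenticated: Set[str]) -> None:
        self._service = service
        self._authenticated = authenticated  # hypothetical stand-in for real auth

    def update(self, customer_id: str, instrument: str, payment_succeeded: bool) -> Optional[SavedRecord]:
        if customer_id not in self._authenticated:
            raise PermissionError(f"customer {customer_id} is not authenticated")
        if not payment_succeeded:
            return None  # only successful payments update usage
        return self._service.record_use(customer_id, instrument, datetime.now(timezone.utc))


if __name__ == "__main__":
    management = QuikpayManagement(QuikpayService(), authenticated={"c1"})
    management.update("c1", "bank1", payment_succeeded=True)
    print(management.update("c1", "bank1", payment_succeeded=True).use_count)  # 2
```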

Payment instruments differ widely in their properties and behaviour and share only a few commonalities, so I implemented the factory method pattern here.

GenericOperations contains the shared behaviour, and each instrument type provides its own overriding implementation, such as GiftVoucherOperations and WalletOperations.
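Here is a minimal sketch of that structure. `GenericOperations`, `WalletOperations` and `GiftVoucherOperations` are the class names from this post, but their methods, the listing rules and the `operations_for` lookup are hypothetical. Note that the lookup is a simple registry-style factory; the textbook factory method pattern instead lets subclasses of a creator class decide which product to instantiate. Either way, callers ask for operations by instrument type and never name a concrete class.

```python
from typing import Dict, Optional, Type


class GenericOperations:
    """Behaviour shared by all saved instruments; subclasses override what differs."""

    def display_name(self, identifier: str) -> str:
        # Default: mask everything except the last four characters.
        return "XXXX" + identifier[-4:]

    def is_listable(self, balance: Optional[float]) -> bool:
        # Default: any saved instrument may be listed.
        return True


class WalletOperations(GenericOperations):
    def display_name(self, identifier: str) -> str:
        return f"Wallet ({identifier})"

    def is_listable(self, balance: Optional[float]) -> bool:
        # Hypothetical rule: hide wallets without a usable balance.
        return balance is not None and balance > 0


class GiftVoucherOperations(GenericOperations):
    def display_name(self, identifier: str) -> str:
        return f"Gift voucher ending {identifier[-4:]}"

    def is_listable(self, balance: Optional[float]) -> bool:
        # Hypothetical rule: hide fully redeemed vouchers.
        return balance is not None and balance > 0


# Registry-style factory: instrument type -> operations class.
_OPERATIONS: Dict[str, Type[GenericOperations]] = {
    "wallet": WalletOperations,
    "giftvoucher": GiftVoucherOperations,
}


def operations_for(instrument_type: str) -> GenericOperations:
    """Return the operations object for a type, falling back to the generic one."""
    return _OPERATIONS.get(instrument_type, GenericOperations)()


if __name__ == "__main__":
    print(operations_for("wallet").display_name("MyWallet"))        # Wallet (MyWallet)
    print(operations_for("card").display_name("4111111111111111"))  # XXXX1111
```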

Resilience policies

To make the APIs performant, I introduced the following:

- Reasonable timeouts, so that slow aggregator APIs cannot hold up the whole response.

- Parallel calls to the aggregators for tokenized cards, alongside other internal service calls.

- Circuit breaking to exclude non-essential and underperforming payment instruments.
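The following asyncio sketch combines the three policies: each downstream call gets a timeout, independent calls run in parallel with `asyncio.gather`, and a per-dependency circuit breaker stops calling an instrument source after repeated failures, so that source is simply left out of the response. The `CircuitBreaker` class, its thresholds and the fake fetchers are illustrative assumptions; a production breaker would also limit how many trial calls go through while half-open.

```python
import asyncio
import time
from typing import Awaitable, Callable, List, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; while open, calls are
    skipped until `reset_after` seconds pass, then a trial call is allowed."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True  # closed
        return time.monotonic() - self.opened_at >= self.reset_after  # half-open trial

    def record(self, ok: bool) -> None:
        if ok:
            self.failures, self.opened_at = 0, None  # close the circuit
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()  # (re)open


async def guarded(
    call: Callable[[], Awaitable[T]], breaker: CircuitBreaker, timeout: float
) -> Optional[T]:
    """Run one downstream call with a timeout. Returns None when the circuit is
    open or the call fails, so the caller simply omits that instrument source."""
    if not breaker.allow():
        return None
    try:
        result = await asyncio.wait_for(call(), timeout)
    except Exception:  # includes asyncio.TimeoutError
        breaker.record(False)
        return None
    breaker.record(True)
    return result


# Hypothetical downstream calls used only for the demo.
async def fetch_tokenized_cards() -> List[str]:
    await asyncio.sleep(0.01)
    return ["card1"]


async def fetch_wallets() -> List[str]:
    await asyncio.sleep(1.0)  # too slow for the timeout below
    return ["wallet1"]


async def demo():
    cards_breaker = CircuitBreaker()
    wallets_breaker = CircuitBreaker(max_failures=1)
    cards, wallets = await asyncio.gather(  # independent calls run in parallel
        guarded(fetch_tokenized_cards, cards_breaker, timeout=0.2),
        guarded(fetch_wallets, wallets_breaker, timeout=0.2),
    )
    return cards, wallets, wallets_breaker.allow()


if __name__ == "__main__":
    print(asyncio.run(demo()))  # (['card1'], None, False)
```

In the demo, the wallet fetcher exceeds its 0.2 s timeout, so the response still contains the cards while wallets are dropped and their breaker opens.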

Refactor of Listing service

The Listing service combines data from the Core and Offer services.

It computes the rank and sorts the instruments accordingly.

It excludes instruments with low success rates.

It applies retries and timeouts to these calls, which serve millions of our customers daily.
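A minimal sketch of the Listing service's merge, exclusion and ranking steps, under stated assumptions: the thresholds (`MIN_SUCCESS_RATE`, `MIN_ATTEMPTS`), the field names, the "last attempt failed" flag used for recently unsuccessful instruments and the frequency/recency score are all hypothetical, and offers are modelled as a simple name-to-description map. Retries and timeouts around the Core and Offer calls are left out to keep the focus on the listing logic.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

MIN_SUCCESS_RATE = 0.5  # hypothetical threshold
MIN_ATTEMPTS = 5        # hypothetical: too little history is not judged


@dataclass
class CoreInstrument:
    name: str
    use_count: int
    days_since_last_use: float
    attempts: int
    successes: int
    last_attempt_failed: bool


@dataclass
class ListedInstrument:
    name: str
    rank_value: float
    offer: Optional[str]


def is_eligible(inst: CoreInstrument) -> bool:
    if inst.last_attempt_failed:
        return False  # hide recently unsuccessful instruments
    if inst.attempts >= MIN_ATTEMPTS and inst.successes / inst.attempts < MIN_SUCCESS_RATE:
        return False  # hide instruments with a low success rate
    return True


def build_listing(core: List[CoreInstrument], offers: Dict[str, str]) -> List[ListedInstrument]:
    """Merge Core data with offers, drop ineligible instruments, sort by rank."""
    listed = [
        ListedInstrument(
            name=inst.name,
            # Hypothetical frequency/recency score.
            rank_value=inst.use_count / (1.0 + inst.days_since_last_use),
            offer=offers.get(inst.name),
        )
        for inst in core
        if is_eligible(inst)
    ]
    return sorted(listed, key=lambda item: item.rank_value, reverse=True)


if __name__ == "__main__":
    core = [
        CoreInstrument("bank1", 10, 3, 10, 9, False),
        CoreInstrument("wallet1", 2, 1, 2, 2, False),
        CoreInstrument("card1", 8, 0, 10, 3, False),  # 30% success: hidden
        CoreInstrument("upi1", 5, 0, 5, 4, True),     # last attempt failed: hidden
    ]
    for item in build_listing(core, {"bank1": "10% off"}):
        print(item)
```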

To make testing easier, I shared post-response scripts with our Quality Assurance team to check the rank values.

Results

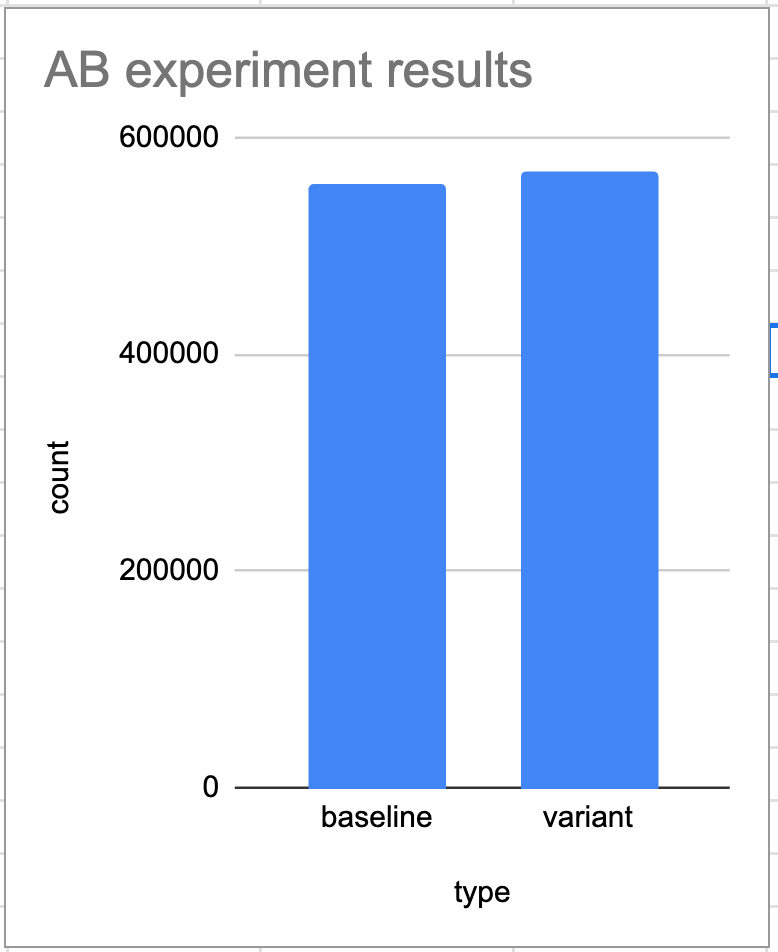

Our A/B experiments showed a 0.02% increase in conversions.

Response times to the frontend dropped by 10%.

Conclusion

Navigating the complexities of modern software development requires a deep understanding of both emerging and foundational principles. Microservices, with their promise of scalability and flexibility, are transforming how we build and deploy applications. However, integrating them into a legacy codebase presents challenges that demand careful planning and execution. Microservices have met our objectives here, but the downsides include network congestion, slightly higher compute costs and more complex troubleshooting.

Thank you for reading, and we strive to continue pushing the boundaries of what is possible!